Why tracking generic understanding matters more than memorizing facts in patient education

When a diabetic patient learns to check their blood sugar, they’re not just memorizing numbers. They’re learning how to adjust their diet, recognize warning signs, and make daily choices that affect their long-term health. That’s generic understanding: the ability to apply knowledge across different situations, not just repeat what they were told.

Too often, patient education is measured by whether someone can answer a quiz question correctly. But that doesn’t tell you if they’ll actually take their insulin when they’re tired, or call their doctor when their foot feels numb. Real effectiveness isn’t about recall. It’s about transfer. Can they use what they learned in a new context? That’s the gap most programs miss.

Direct vs. indirect methods: What actually proves understanding?

There are two ways to measure learning: direct and indirect. Direct methods look at what people actually do. Indirect methods ask them what they think they did. One shows truth. The other shows opinion.

Direct methods include:

- Observing a patient demonstrate how to use an inhaler with proper technique

- Reviewing a completed food log that shows real dietary changes

- Watching someone explain their medication schedule in their own words

- Using a checklist to score how well a patient manages a simulated emergency

These aren’t guesses. They’re evidence. A 2022 survey of 412 U.S. hospitals found that 87% of programs using direct assessments reported better patient outcomes than those relying only on surveys.

Indirect methods, like post-class questionnaires or follow-up calls asking “Did you feel educated?”, sound useful. But they’re noisy. People say yes to please you. They forget details. Or they think they understood, but didn’t. One study showed alumni surveys on patient education had response rates under 20%. That’s not data. That’s noise.

Formative assessment: The secret weapon most clinics ignore

Most patient education ends with a handout and a checkmark. But real learning happens in the gaps: between sessions, during confusion, after a setback.

Formative assessment means checking understanding along the way. It’s not a test. It’s a conversation.

Here’s how it works in practice:

- After explaining insulin storage, ask: “What’s one thing you’d tell your spouse about keeping your pen safe?”

- At the end of a hypertension session, have patients write down the one thing they’re most unsure about on a sticky note.

- Use a simple 1-5 scale: “How confident are you that you could handle a low blood sugar episode alone?”

These take 30 seconds. But they reveal gaps before they become problems. A nurse in Dunedin reported that using three-question exit tickets cut her reteaching time by 40%. Why? Because she caught misunderstandings early, such as a patient thinking “no sugar” meant “no fruit,” or confusing blood pressure readings with glucose levels.

Formative tools don’t grade. They guide. And they turn education from a one-time event into an ongoing dialogue.

Criterion-referenced vs. norm-referenced: Why comparing patients doesn’t work

Some clinics measure success by comparing patients to each other. “Most people in this group passed the test.” That’s norm-referenced assessment. It tells you who did better, but not who understood.

Criterion-referenced assessment asks: Did the patient meet the standard? Not compared to others. But against what they need to know to stay safe.

For example:

- Criterion: “Patient can correctly identify three signs of hypoglycemia and state what to do.”

- Norm: “Patient scored above the group average on a 10-question quiz.”

The first tells you if they’re ready to go home. The second tells you who’s smartest in the room. In patient education, the goal isn’t to outperform others. It’s to avoid hospital readmission.

Using norm-referenced tools in this context is like judging a driver by how fast they drove compared to others, not by whether they stopped at red lights.
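To make the contrast concrete, here is a minimal Python sketch that applies both approaches to the same small group. Everything in it is an assumption for illustration: the patient labels, the scores, and the field names `signs_named` and `stated_action` are made up, and the criterion simply mirrors the hypoglycemia example above.

```python
from statistics import mean

# Illustrative data only: quiz scores out of 10, plus what an educator observed
# when each patient was asked about hypoglycemia. All names are hypothetical.
patients = {
    "patient_a": {"quiz_score": 4, "signs_named": 1, "stated_action": False},
    "patient_b": {"quiz_score": 5, "signs_named": 3, "stated_action": True},
    "patient_c": {"quiz_score": 7, "signs_named": 2, "stated_action": True},
    "patient_d": {"quiz_score": 9, "signs_named": 3, "stated_action": True},
}


def norm_referenced(patients):
    """True if the patient scored above the group average on the quiz."""
    average = mean(p["quiz_score"] for p in patients.values())
    return {name: p["quiz_score"] > average for name, p in patients.items()}


def criterion_referenced(patients, required_signs=3):
    """True if the patient named enough signs and stated what to do."""
    return {
        name: p["signs_named"] >= required_signs and p["stated_action"]
        for name, p in patients.items()
    }


above_average = norm_referenced(patients)
meets_criterion = criterion_referenced(patients)

for name in patients:
    print(f"{name}: above average={above_average[name]}, meets criterion={meets_criterion[name]}")
```

In this made-up group, patient_b scores below the average yet meets the safety criterion, while patient_c scores above the average and does not. The two methods answer different questions, and only one of them is about readiness.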

Why rubrics are the most underused tool in patient education

A rubric isn’t a grading scale. It’s a map of what mastery looks like.

For a wound care session, a simple rubric might look like this:

| Skill | Needs Improvement | Meets Standard | Exceeds Standard |
|---|---|---|---|
| Hand hygiene | Didn’t wash or used incorrect technique | Washed properly before and after | Explained why it matters and demonstrated timing |
| Bandage application | Too loose or too tight | Applied correctly, secure but not restrictive | Adjusted for movement and described signs of poor fit |
| Recognizing infection | Couldn’t name any signs | Named 2 out of 3 signs | Named all 3 and explained when to call for help |

A 2023 LinkedIn survey of 142 healthcare educators found that 78% said detailed rubrics improved both patient performance and their own efficiency. Why? Because patients know exactly what success looks like. No guesswork. No confusion.

Rubrics also help staff stay consistent. One clinic in Auckland reported a 30% drop in patient complaints after switching from vague feedback (“You did okay”) to rubric-based scoring.
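For clinics that record rubric scores electronically, the wound care rubric could be represented along these lines. This is only a sketch under stated assumptions: the `Level` enum, the `gaps` and `meets_all_standards` helpers, and the rule that an unscored skill counts as a gap are illustrative choices, not part of any published tool.

```python
from enum import IntEnum


class Level(IntEnum):
    """Rubric levels, ordered so they can be compared."""
    NEEDS_IMPROVEMENT = 1
    MEETS_STANDARD = 2
    EXCEEDS_STANDARD = 3


# Skills taken from the wound care rubric in the table.
SKILLS = ("Hand hygiene", "Bandage application", "Recognizing infection")


def gaps(observed):
    """Return the skills scored below Meets Standard.

    Assumption: a skill that was never observed is treated as a gap.
    """
    return [
        skill
        for skill in SKILLS
        if observed.get(skill, Level.NEEDS_IMPROVEMENT) < Level.MEETS_STANDARD
    ]


def meets_all_standards(observed):
    """True only when every skill is at Meets Standard or higher."""
    return not gaps(observed)


# Hypothetical observation from one session.
session = {
    "Hand hygiene": Level.EXCEEDS_STANDARD,
    "Bandage application": Level.MEETS_STANDARD,
    "Recognizing infection": Level.NEEDS_IMPROVEMENT,
}

print(gaps(session))                 # skills to reteach
print(meets_all_standards(session))  # overall readiness on this rubric
```

Note that the sketch does not average the levels. A strong score on hand hygiene cannot offset a missed infection sign, which keeps the result criterion-based.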

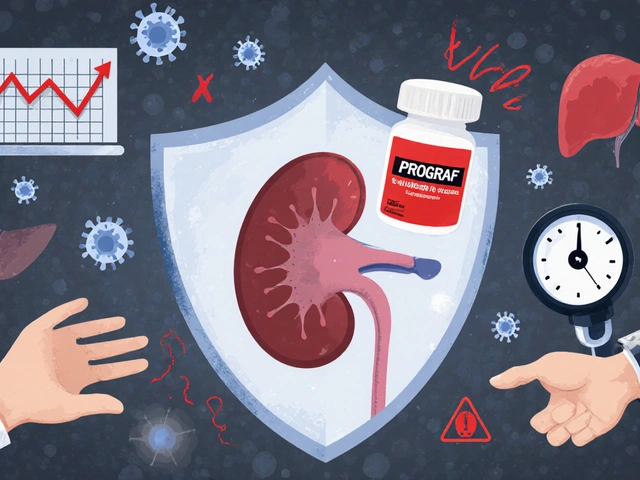

The hidden barrier: What you can’t measure

Not everything that affects learning can be seen. Emotions matter. Fear. Shame. Past trauma. Distrust in the system. These aren’t on any checklist.

A patient might nod along during education, then skip doses because they’re afraid of side effects, or because they don’t believe the doctor cares. No quiz can catch that.

That’s why some clinics now pair direct assessments with short, open-ended check-ins:

- “What’s the hardest part about sticking to your plan?”

- “Is there anything about your care that makes you feel unheard?”

- “What would make it easier for you to follow through?”

These aren’t formal surveys. They’re moments of listening. And they often reveal the real barriers: transportation, cost, language, family pressure.

Ignoring these doesn’t make them disappear. It just makes education ineffective.

What works in 2026: The three-step framework

Based on data from 63 countries and 150 clinics, here’s what’s working now:

- Start with diagnostic checks. Before teaching, ask: “What do you already know about this?” This avoids repeating what they know and uncovers myths.

- Embed formative feedback. Use exit tickets, live demos, and quick confidence scales after every session. Adjust teaching in real time.

- End with criterion-based performance. Don’t just ask if they understood. Watch them do it. Use a rubric. Record it if possible.

Programs using this approach saw a 52% increase in patient adherence over 12 months, according to a 2023 study in the Journal of Patient Education.

The goal isn’t to turn every patient into a medical expert. It’s to give them the confidence and competence to manage their health, day after day, in real life.

What’s next? AI and adaptive learning in patient education

By 2027, 58% of health systems expect to use AI-powered tools that adapt education content based on how patients respond.

Imagine this: A patient answers a few questions after a diabetes class. The system notices they keep confusing insulin types. It automatically sends a short video explaining the difference, with simple visuals and real-life examples. Later, it checks again. If they still struggle, it offers a call with a peer educator.

This isn’t sci-fi. It’s already being tested in New Zealand and Canada. The goal? Personalize learning without overloading staff.

But AI won’t replace listening. It’ll just make it easier to spot when someone needs to be heard.
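The escalation in the diabetes example above (check, send a video, check again, offer a peer educator call) can be sketched as a simple rule, with no AI involved. This is a hypothetical illustration, not how any system being tested actually works: `TopicProgress`, `next_action`, and the action names are all invented here.

```python
from dataclasses import dataclass


@dataclass
class TopicProgress:
    """Tracks follow-up for one topic a patient has struggled with."""
    topic: str
    videos_sent: int = 0


def next_action(progress, answered_correctly):
    """Pick the next follow-up step after a check-in question.

    Assumed policy: one video first, then a human conversation if the
    patient still struggles on the next check.
    """
    if answered_correctly:
        return "no_action"
    if progress.videos_sent == 0:
        progress.videos_sent += 1
        return "send_video"
    return "offer_peer_educator_call"


progress = TopicProgress(topic="insulin types")
first = next_action(progress, answered_correctly=False)   # first miss
second = next_action(progress, answered_correctly=False)  # still struggling
print(first, second)
```

The design choice worth noticing is the last branch: once automated content has been tried, the next step hands off to a person rather than sending more material.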

14 Comments

lol so now they want us to believe the government and pharma are secretly using 'formative assessment' to track our blood sugar so they can sell us more meds? 🤔 i've seen the docs - they don't care if you understand, they just want you to sign the waiver and shut up.

This is exactly what we need more of. Real-world skills over bubble tests. Patients aren't students in a classroom. They're people trying to survive.

The emphasis on criterion-referenced assessment is both clinically sound and ethically necessary. When outcomes are tied to safety rather than comparative performance, we align education with its true purpose: harm reduction.

In my community, we’ve seen how language and cultural context change everything. A rubric that works in Chicago might fail in rural Alabama. We need adaptable tools, not one-size-fits-all checklists.

Let’s be real: the entire healthcare-industrial complex is engineered to keep you dependent. They don’t want you to master your condition. They want you to keep showing up. The ‘formative feedback’ they tout? It’s just a new layer of surveillance disguised as care.

In India, we teach by doing. Not by quizzes. A man learns insulin by watching his wife do it first. Then he tries. Then he fails. Then he tries again. No form. No score. Just life.

i love the rubric idea 😍 but why do they always make it soooo boring? can we have memes with the checklist? like 🤢 when you forget to wash hands? 🥲

I’ve been doing this for 12 years and this is the first time I’ve seen someone actually describe what good patient education looks like. No fluff. No jargon. Just truth. I’m printing this out and taping it to my clipboard.

The integration of criterion-referenced assessment into clinical education protocols represents a paradigmatic shift toward evidence-based, patient-centered outcomes.

I’ve spent the last decade working in rural clinics where the biggest barrier isn’t knowledge, it’s the silence. Patients nod because they’re afraid to say they don’t get it. They don’t want to look stupid. They don’t want to be judged. That’s why the open-ended check-ins, like ‘What’s the hardest part?’, aren’t just questions. They’re lifelines. And if we’re not listening to the answers, none of the rubrics, none of the demos, none of the AI tools matter. We’re just performing education, not delivering it.

This is fire 🔥 stop the quizzes start the doing and if someone cant do it then you dont move on until they can simple as that

i tried the sticky note thing at my clinic last week and one patient wrote 'i dont trust anyone with a stethoscope'... i cried. then i called her back and just listened. no advice. just heard her. she came back next week and did the inhaler demo perfect.

I’ve seen patients from refugee backgrounds who’ve never seen a blood glucose meter. They don’t need a 20-page handout. They need someone to hold their hand and say, 'Let’s do this together.' The tools help, but the human moment? That’s what sticks.

The most profound insight here isn’t the methodology, it’s the assumption that understanding can be measured at all. We reduce human learning to observable behaviors because that’s what’s quantifiable, not because it’s complete. What of the internal dialogue? The fear of failure? The shame of needing help? The quiet resolve to try again tomorrow? These are the unseen dimensions of mastery. Rubrics map the surface. But the soul of learning? That’s still uncharted territory. And perhaps it should be.